Introduction

Operations research (OR) is a vast area comprising a lot of theory, different branches of mathematics, and too many applications to count. In this post, I will try to explain why it can be a little disconcerting to explore at first, and how to start investigating the topic with a few references to get started.

Keep in mind that although I studied it during my graduate studies, this is not my primary area of expertise (I’m a data scientist by trade), and I definitely don’t pretend to know everything in OR. This is a field too vast for any single person to understand in its entirety, and I talk mostly from an “amateur mathematician and computer scientist” standpoint.

Why is it hard to approach?

Operations research can be difficult to approach, since there are many references and subfields. Compared to machine learning for instance, OR has a slightly longer history (going back to the 18th century, for example with Monge and the optimal transport problem). This means that good textbooks and such have existed for a long time, but also that there will be plenty of material to choose from.

(For a very nice introduction to optimal transport, in French, see these blog posts by Gabriel Peyré on the CNRS maths blog: Part 1 and Part 2. See also the resources on optimaltransport.github.io, in English.)

Moreover, OR is very close to applications. Sometimes methods may vary a lot in their presentation depending on whether they’re applied to train tracks, sudoku, or travelling salesmen. In practice, the terminology and notation are not the same everywhere. This is disconcerting if you are used to “pure” mathematics, where notation has evolved over a long time and is pretty much standardised in many areas. In contrast, if you’re used to the statistics literature with its strange notation, you will find that OR is actually very well formalized.

There are many subfields of operations research, including all kinds of optimization (constrained and unconstrained), game theory, dynamic programming, stochastic processes, etc.

Where to start

Introduction and modelling

For an overall introduction, I recommend Wentzel (1988). It is an old book, published by Mir Publishers, a Soviet publisher that produced many excellent scientific textbooks. It is out of print, but it is available on Archive.org. Despite its age, everything presented is still extremely relevant today. It requires absolutely no background, and covers everything: a general introduction to the field, linear programming, dynamic programming, Markov processes and queues, Monte Carlo methods, and game theory. Even if you already know some of these topics, the presentation is so clear that it is a pleasure to read! (In particular, it is one of the best presentations of dynamic programming that I have ever read. The explanation of the simplex algorithm is also excellent.)

(Mir also published Physics for Everyone by Lev Landau and Alexander Kitaigorodsky, a three-volume introduction to physics that is really accessible. Together with Feynman’s famous lectures, I read them (in French) when I was a kid, and it was the best introduction I could possibly have to the subject.)

If you are interested in optimization, the first thing you have to learn is modelling, i.e. transforming your problem (described in natural language, often from a particular industrial application) into a mathematical programme. The mathematical programme is the structure on which you will be able to apply an algorithm to find an optimal solution. Even if (like me) you are initially more interested in the algorithmic side of things, learning to create models will shed a lot of light on the overall process, and will give you more insight in general on the reasoning behind algorithms.
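As a minimal illustration of this translation step, here is a made-up toy problem (the numbers are purely illustrative and not taken from any of the books cited here). A workshop makes two products, A and B, earning a profit of 3 per unit of A and 5 per unit of B. Three machines are available for 4, 12, and 18 hours respectively. One unit of A needs 1 hour on machine 1 and 3 hours on machine 3; one unit of B needs 2 hours on machine 2 and 2 hours on machine 3. Writing $x$ and $y$ for the quantities of A and B produced, one possible mathematical programme is:

```latex
\begin{aligned}
\max_{x,\, y} \quad & 3x + 5y           && \text{(total profit)} \\
\text{s.t.} \quad   & x \le 4           && \text{(machine 1 hours)} \\
                    & 2y \le 12         && \text{(machine 2 hours)} \\
                    & 3x + 2y \le 18    && \text{(machine 3 hours)} \\
                    & x \ge 0,\ y \ge 0 && \text{(no negative production)}
\end{aligned}
```

Every sentence of the description becomes either the objective or one constraint. Since everything here is linear, this is a linear programme, the simplest type of problem in the "mental map" of the field.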

The best book I have read on the subject is Williams (2013). It contains a lot of step-by-step examples on concrete applications, in a multitude of domains, and remains very easy to read and to follow. It covers nearly every type of problem, so it is very useful as a reference. When you encounter a concrete problem in real life afterwards, you will know how to construct an appropriate model, and in the process you will often identify a common type of problem. The book then gives plenty of advice on how to approach each type of problem. Finally, it is also a great resource to build a “mental map” of the field, avoiding getting lost in the jungle of linear, stochastic, mixed integer, quadratic, and other network problems.

Another interesting resource is the freely available MOSEK Modeling Cookbook, covering many types of problems, with more mathematical details than in Williams (2013). It is built for people wanting to use the commercial MOSEK solver, so it could be useful if you plan to use a solver package like this one (more details on solvers below).

Theory and algorithms

The basic algorithm for optimization is the simplex algorithm, developed by Dantzig in the 1940s to solve linear programming problems. It is one of the main building blocks of mathematical optimization, and is used and referenced extensively in all kinds of approaches. As such, it is really important to understand it in detail. There are many books on the subject, but I especially liked Chvátal (1983) (out of print, but you can find cheap used copies on Amazon). It covers everything there is to know about the simplex algorithm (step-by-step explanations with simple examples, correctness and complexity analysis, computational and implementation considerations), as well as many applications. I think it is overall the best introduction. Vanderbei (2014) follows a very similar outline, but contains more recent computational considerations. (The author also has lecture slides.)

(For all the details about practical implementations of the simplex algorithm, Maros (2003) is dedicated to the computational aspects and contains everything you will need.)

For more books on linear programming, Dantzig (1997) and Dantzig (2003) are very complete, if somewhat more mathematically advanced. Bertsimas and Tsitsiklis (1997) is also a great reference, if you can find it.

For all the other subfields, this great StackExchange answer contains a lot of useful references, including most of the above. Of particular note are Peyré and Cuturi (2019) for optimal transport, Boyd (2004) for convex optimization (freely available online), and Nocedal (2006) for numerical optimization. Kochenderfer (2019) is not in the list (because it is very recent) but is also excellent, with examples in Julia covering nearly every kind of optimization algorithm.

Online courses

If you would like to watch video lectures, there are a few good opportunities freely available online, in particular on MIT OpenCourseWare. The list of courses at MIT is available on their webpage. I haven’t actually looked in detail at the course contents, so I cannot vouch for them directly, but MIT courses are generally of excellent quality. Most courses are also taught by Bertsimas and Bertsekas, who are very famous and wrote many excellent books.

(I am more comfortable reading books than watching lecture videos online. Although I liked attending classes during my studies, I do not have the same feeling in front of a video. When I read, I can re-read the same sentence three times, pause to look something up, or skim a few paragraphs. I find that the inability to do that with a video greatly diminishes my ability to concentrate.)

Of particular note are:

- Introduction to Mathematical Programming,

- Nonlinear Optimization,

- Convex Analysis and Optimization,

- Algebraic Techniques and Semidefinite Optimization,

- Integer Programming and Combinatorial Optimization.

Another interesting course I found online is Deep Learning in Discrete Optimization, at Johns Hopkins. It contains an interesting overview of deep learning and integer programming, with a focus on connections, and applications to recent research areas in ML (reinforcement learning, attention, etc.).

(It is taught by William Cook, who is the author of In Pursuit of the Traveling Salesman, a nice introduction to the TSP in a readable form.)

Solvers and computational resources

When you start reading about modelling and algorithms, I recommend you try solving a few problems yourself, either by hand for small instances, or using an existing solver. It will allow you to follow the examples in books, while also practising your modelling skills. You will also get an intuition of what is difficult to model and to solve.
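To make "solving a small instance by hand" concrete, here is a minimal sketch in Python. It uses a made-up two-variable linear programme (maximize 3x + 5y subject to x ≤ 4, 2y ≤ 12, 3x + 2y ≤ 18, x, y ≥ 0) and solves it by brute-force vertex enumeration: intersect every pair of constraint boundaries, keep the feasible points, and pick the best one. This is not the simplex algorithm, and not what a real solver does; it only relies on the fact that a bounded linear programme with a non-empty feasible region attains its optimum at a vertex. All names in the code are illustrative.

```python
from fractions import Fraction
from itertools import combinations

# Toy model (made-up numbers): maximize 3x + 5y subject to
#   x <= 4, 2y <= 12, 3x + 2y <= 18, x >= 0, y >= 0.
# Each constraint is stored as (a, b, c), meaning a*x + b*y <= c.
CONSTRAINTS = [
    (1, 0, 4),
    (0, 2, 12),
    (3, 2, 18),
    (-1, 0, 0),  # x >= 0 rewritten as -x <= 0
    (0, -1, 0),  # y >= 0 rewritten as -y <= 0
]
OBJECTIVE = (3, 5)


def intersection(c1, c2):
    """Return the point where two constraint boundaries meet, or None if parallel."""
    a1, b1, r1 = c1
    a2, b2, r2 = c2
    det = a1 * b2 - a2 * b1
    if det == 0:
        return None
    # Cramer's rule, in exact rational arithmetic to avoid rounding issues
    x = Fraction(r1 * b2 - r2 * b1, det)
    y = Fraction(a1 * r2 - a2 * r1, det)
    return x, y


def is_feasible(point, constraints):
    """Check that a point satisfies every constraint."""
    x, y = point
    return all(a * x + b * y <= c for a, b, c in constraints)


def solve_by_vertex_enumeration(objective, constraints):
    """Brute-force a bounded 2-variable LP by checking every feasible vertex."""
    best_point, best_value = None, None
    for c1, c2 in combinations(constraints, 2):
        point = intersection(c1, c2)
        if point is None or not is_feasible(point, constraints):
            continue
        value = objective[0] * point[0] + objective[1] * point[1]
        if best_value is None or value > best_value:
            best_point, best_value = point, value
    return best_point, best_value


best_point, best_value = solve_by_vertex_enumeration(OBJECTIVE, CONSTRAINTS)
print(best_point, best_value)
```

On this instance the best vertex is x = 2, y = 6, with an objective value of 36. The number of candidate vertices grows combinatorially with the number of variables and constraints, which is one way to get an intuition for why dedicated algorithms and solvers are needed beyond toy sizes.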

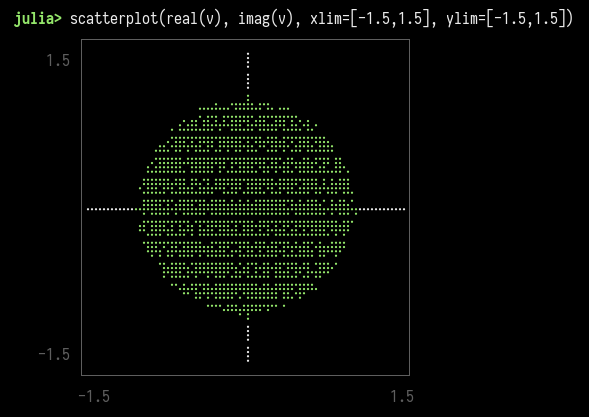

There are many solvers available, both free and commercial, with various capabilities. I recommend you use the fantastic JuMP library for Julia, which exposes a domain-specific language for modelling, along with interfaces to nearly all major solver packages. (Even if you don’t know Julia, this is a great and easy way to start!) If you’d rather use Python, you can use Google’s OR-Tools or PuLP for linear programming.

Regarding solvers, there is a list of solvers on JuMP’s documentation, with their capabilities and their license. Free solvers include GLPK (linear programming), Ipopt (non-linear programming), and SCIP (mixed-integer linear programming).

Commercial solvers often have better performance, and several of them offer free academic licenses: MOSEK, Gurobi, and IBM CPLEX in particular, all of which work very well with JuMP.

Another awesome resource is the NEOS Server. It offers free computing resources for numerical optimization, including all major free and commercial solvers! You can submit jobs on it in a standard format, or interface your favourite programming language with it. The fact that such an amazing resource exists for free, for everyone, is extraordinary. They also have an accompanying book, the NEOS Guide, containing many case studies and descriptions of problem types. The taxonomy may be particularly useful.

Conclusion

Operations research is a fascinating topic, and it has an abundant literature that makes it very easy to dive into the subject. If you are interested in algorithms, modelling for practical applications, or just wish to understand more, I hope to have given you a few first steps to follow, so that you can start reading and experimenting.

References

Bertsimas, Dimitris, and John N. Tsitsiklis. 1997. Introduction to Linear Optimization. Belmont, Massachusetts: Athena Scientific. http://www.athenasc.com/linoptbook.html.

Boyd, Stephen. 2004. Convex Optimization. Cambridge, UK; New York: Cambridge University Press.

Chvátal, Vašek. 1983. Linear Programming. New York: W.H. Freeman.

Dantzig, George. 1997. Linear Programming 1: Introduction. New York: Springer. https://www.springer.com/gp/book/9780387948331.

———. 2003. Linear Programming 2: Theory and Extensions. New York: Springer. https://www.springer.com/gp/book/9780387986135.

Kochenderfer, Mykel. 2019. Algorithms for Optimization. Cambridge, Massachusetts: The MIT Press.

Maros, István. 2003. Computational Techniques of the Simplex Method. Boston: Kluwer Academic Publishers.

Nocedal, Jorge. 2006. Numerical Optimization. New York: Springer. https://www.springer.com/gp/book/9780387303031.

Peyré, Gabriel, and Marco Cuturi. 2019. “Computational Optimal Transport.” Foundations and Trends in Machine Learning 11 (5-6): 355–607. https://doi.org/10.1561/2200000073.

Vanderbei, Robert. 2014. Linear Programming: Foundations and Extensions. New York: Springer.

Wentzel, Elena S. 1988. Operations Research: A Methodological Approach. Moscow: Mir Publishers.

Williams, H. Paul. 2013. Model Building in Mathematical Programming. Chichester, West Sussex: Wiley. https://www.wiley.com/en-fr/Model+Building+in+Mathematical+Programming,+5th+Edition-p-9781118443330.